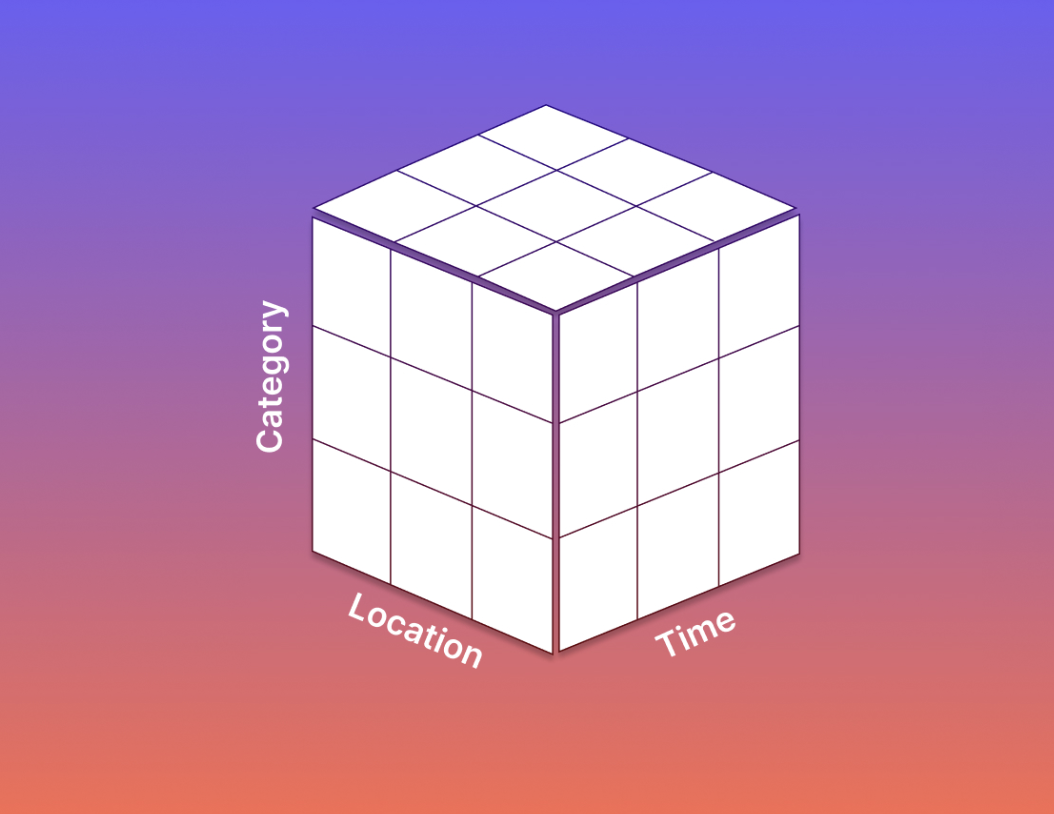

In defense of metric cubes: Just as Kimball-style data modeling streamlined metric computations, metric cubes enabled performant data products.

With the shift to cloud data platforms and elastic storage/compute, two significant behavioral shifts occurred:

- Less rigorous data modeling—write SQL, generate tables/charts

- Compute everything on-demand with the freshest data

Both are appealing because they reduce maintenance overhead and make platforms simpler and more flexible. However, without rigorous data modeling, every added degree of freedom in transforming data raises the risk of chaos downstream. Consistent, reliable data outweighs full flexibility, which, honestly, very few in the business can truly exploit.

This trend is already reversing. We’ve learned the hard way, and the discipline of data modeling is being revived, with concepts from Kimball gaining new relevance.

The second shift was running queries on demand against the freshest data. The appeal is the same: reduced maintenance and immediate access to the latest data. However, there are two ramifications:

- As data sizes grow, it becomes impossible to meet SLAs for on-demand queries without pre-aggregating or rolling up data anyway.

- Even when data sizes are not prohibitive, on-demand queries make the user experience slower, and that slowness becomes baked into expectations.

This trend is also reversing. New database engines like DuckDB are purpose-built for fast on-demand computations, and there’s a renewed emphasis on rollups and pre-computations at the data modeling layer.
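To make the rollup idea concrete, here is a minimal sketch in plain Python. The event rows, the `(day, region)` grain, and the function names are all hypothetical, chosen only for illustration; a real platform would do this in SQL or a transformation tool. The point is that the dashboard query reads the small pre-aggregated table, not the raw events.

```python
from collections import defaultdict

# Hypothetical raw event rows: (day, region, product, revenue).
EVENTS = [
    ("2024-01-01", "EU", "basic", 10.0),
    ("2024-01-01", "EU", "pro", 30.0),
    ("2024-01-01", "US", "basic", 20.0),
    ("2024-01-02", "EU", "basic", 15.0),
    ("2024-01-02", "US", "pro", 40.0),
]


def build_daily_rollup(events):
    """Pre-aggregate raw events to one row per (day, region)."""
    rollup = defaultdict(float)
    for day, region, _product, revenue in events:
        rollup[(day, region)] += revenue
    return dict(rollup)


def revenue_by_region(rollup):
    """Answer a dashboard query from the rollup, never touching raw events."""
    totals = defaultdict(float)
    for (_day, region), revenue in rollup.items():
        totals[region] += revenue
    return dict(totals)


# Built once upstream (for example on a schedule), read many times downstream.
DAILY_ROLLUP = build_daily_rollup(EVENTS)
```

The trade-off is visible even at this scale: the rollup has to be rebuilt when new events arrive, and it can no longer answer questions about `product`, but every query it does serve scans far fewer rows.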

The much-maligned metric cube wasn’t a bad idea. In fact, it captured the common ways metrics were sliced and diced and enabled performant user experiences. I would gladly trade additional maintenance costs upstream to provide a better experience downstream.
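As a rough illustration of what a cube captures, the sketch below precomputes one metric for every combination of dimensions so that any slice becomes a dictionary lookup. The dimension names, rows, and helper functions are assumptions made up for this example, not a reference to any particular cube product.

```python
from collections import defaultdict
from itertools import combinations

# Hypothetical dimensions and fact rows.
DIMENSIONS = ("region", "product")
ROWS = [
    {"region": "EU", "product": "basic", "revenue": 10.0},
    {"region": "EU", "product": "pro", "revenue": 30.0},
    {"region": "US", "product": "basic", "revenue": 20.0},
    {"region": "US", "product": "pro", "revenue": 40.0},
]


def build_cube(rows, dimensions, measure):
    """Precompute sum(measure) for every subset of the dimensions."""
    cube = {}
    for size in range(len(dimensions) + 1):
        for dims in combinations(dimensions, size):
            totals = defaultdict(float)
            for row in rows:
                key = tuple(row[d] for d in dims)
                totals[key] += row[measure]
            cube[dims] = dict(totals)
    return cube


def slice_metric(cube, dimensions, **filters):
    """Look up a precomputed total; no aggregation happens at query time."""
    dims = tuple(d for d in dimensions if d in filters)
    key = tuple(filters[d] for d in dims)
    return cube[dims].get(key, 0.0)


CUBE = build_cube(ROWS, DIMENSIONS, "revenue")
grand_total = slice_metric(CUBE, DIMENSIONS)
eu_total = slice_metric(CUBE, DIMENSIONS, region="EU")
us_pro_total = slice_metric(CUBE, DIMENSIONS, region="US", product="pro")
```

The number of precomputed groupings doubles with each added dimension, which is the upstream maintenance cost being traded for a fast downstream experience.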