As Snowflake holds its annual summit, I’m struck by how young the company still is. At Rent the Runway, we were early adopters, and our data platform journey sheds light on why Snowflake scaled so rapidly over the last 5 years alongside a first wave of products (such as Fivetran and dbt), and why a second wave is both necessary and imminent.

First, let’s travel back to 2012. As a young startup, we had to pick a data platform on which we could run reporting, analytical deep dives, and predictive models. Hadoop was the only name in the data ecosystem, which would have meant rolling our own infrastructure and stitching together our own system. Here I was, a technology exec with a small data team facing an ambitious analytics roadmap and a small engineering team facing an ambitious product roadmap, now also having to build a platform team for data infrastructure and apps. This seemed daunting.

Thankfully, conversations with peers steered me toward columnar data warehouses, and specifically Vertica. Redshift launched soon after as a columnar store in the cloud, and then Snowflake and BigQuery came along. These platforms exploded in usage as every company wanted to collect and leverage its data assets to analyze, measure, and drive metrics.

We eventually migrated to Snowflake. Yet these cloud platforms have existed for only 5 to 7 years. The first wave of tooling built on them was geared toward technical data and analytics engineers, reflecting the need to collect and pipe disparate datasets and prepare them for consumption. But as companies grow, data analysts, scientists, and operators take center stage in using data to drive the business. Their work transforming data for metrics-related use cases is where data ROI is generated.

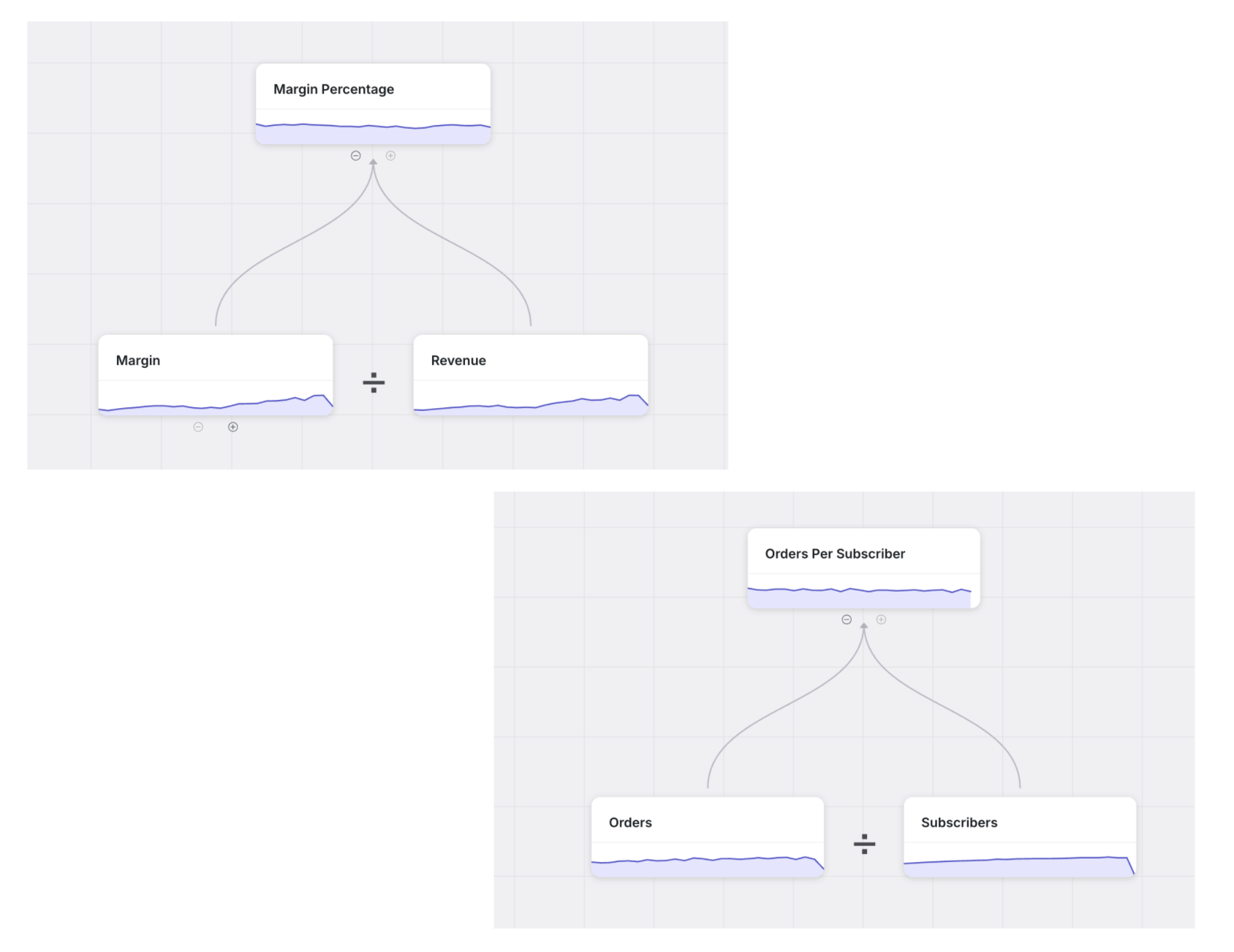

In the next wave, we need to standardize and automate powerful transformation functionality for analysts and operators. Once base datasets are mapped to business semantics (metrics, attributes, connections, etc.), a powerful set of apps can be built on top (let’s call it the “metrics stack”) that puts high-value data transformations directly in their hands.

Examples include:

1. A root cause analysis that shows which segments moved a metric.
2. Running an experiment and instantly viewing all relevant metrics.
3. Separating the metric impact of a product initiative from organic fluctuations.
4. Running a what-if scenario on a metric.
5. And the one I’m most excited about: systematically understanding the connections between metrics across all of these use cases.
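To make the first example a little more concrete, here is a minimal sketch of segment-level contribution analysis in Python. It assumes an additive metric (such as revenue or order count) whose total is the sum of its per-segment values, so the total change splits exactly into per-segment deltas. The function name `segment_contributions` and the segment names and numbers below are hypothetical illustrations, not part of any product described here; non-additive metrics such as conversion rates need a more careful decomposition.

```python
def segment_contributions(
    before: dict[str, float], after: dict[str, float]
) -> list[tuple[str, float, float]]:
    """Rank segments by how much they moved an additive metric.

    Returns (segment, delta, share_of_total_change) tuples, largest |delta| first.
    Assumes the metric total is the sum of its per-segment values.
    """
    # A segment missing from one period is treated as contributing 0 there.
    segments = sorted(set(before) | set(after))
    deltas = {s: after.get(s, 0.0) - before.get(s, 0.0) for s in segments}
    total_change = sum(deltas.values())

    rows = []
    for s in segments:
        d = deltas[s]
        # Share is undefined when the total did not move at all.
        share = d / total_change if total_change != 0 else float("nan")
        rows.append((s, d, share))

    # Stable sort: segments with equal |delta| keep their alphabetical order.
    rows.sort(key=lambda row: abs(row[1]), reverse=True)
    return rows
```

For example, with `before = {"web": 100.0, "ios": 80.0, "android": 50.0}` and `after = {"web": 95.0, "ios": 110.0, "android": 52.0}`, the total rises by 27 and `ios` ranks first: its +30 more than accounts for the whole change, partly offset by a -5 drop in `web`.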

All this and more can be commoditized with the right building blocks on the data platform. Every technological revolution is about finding the right abstractions: taking something bespoke and specialized and simplifying it through standardization and automation. The data revolution is no different. I’m excited to see what this Snowflake conference looks like in 5 years, as this new wave of data products rises.