Many organizations have adopted an Extract, Load, and then Transform (ELT) pattern on cloud data platforms, attracted by its low friction in getting started, only to find themselves paying the price later as the business gets mired in ad-hoc, spaghetti transformation code. This hurts both the quality and velocity of data delivery, and there is a growing consensus that stronger business modeling is a critical investment.

Thoughtful practitioners like Chad Sanderson and Juan Sequeda have been very vocal about this need for strong business semantic modeling in the data warehouse. They are equally passionate about starting with the value to end users, the applications, the UX, and working backwards to treat data as a product in its own right. These two ideas are tightly coupled - a business semantic infrastructure is only as useful and powerful as the workflows and applications it can power, and in fact, it is the applications that should shape and chisel this infrastructure.

I’ve been on both sides of this data delivery equation - producing data assets as the chief analytics officer, and consuming data outputs as a head of growth. As an example of the value of business semantic modeling, let’s say I’m tracking customer conversion rate as the business is experimenting with different marketing tactics and expanding operations in new regions. To start off, one could write a query that computes conversion rate by channel and region and generate a report.

Alternatively, we could model this as a customer conversion rate metric, on the user entity, with dimensional attributes: channel and region. This shift may seem minor, but codifying the semantic objects rather than writing a SQL blob provides the flexibility to manipulate the metric definition centrally, create new variants with ease, and add new attributes to slice and dice the metric. An analyst in the product team can define a new attribute on the user entity based on a key action in the first session, or on the promotional offer the user is exposed to, and these attributes can be made instantly available to the growth team.
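To make the contrast with a one-off query concrete, here is a minimal sketch in plain Python. Everything in it is an assumption for illustration: the `RateMetric` class, its field names, and the four sample users are hypothetical and do not reflect any particular semantic layer product. The point is only that the metric is declared once on the user entity, and slicing by a new attribute means registering a dimension rather than rewriting a query.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class RateMetric:
    """A ratio metric declared once on an entity (hypothetical structure)."""
    name: str
    entity: str
    flag: str  # boolean column counted in the numerator
    dimensions: set = field(default_factory=set)

    def compute(self, rows, by=()):
        """Return {dimension values: rate}, grouped by registered dimensions."""
        unknown = set(by) - self.dimensions
        if unknown:
            raise ValueError(f"unknown dimensions: {sorted(unknown)}")
        counts = defaultdict(lambda: [0, 0])  # key -> [converted, total]
        for row in rows:
            key = tuple(row[d] for d in by)
            counts[key][0] += bool(row[self.flag])
            counts[key][1] += 1
        return {key: hits / total for key, (hits, total) in counts.items()}


# Made-up rows on the user entity, for illustration only.
users = [
    {"channel": "paid", "region": "US", "promo": "free_trial", "converted": True},
    {"channel": "paid", "region": "US", "promo": "none", "converted": False},
    {"channel": "organic", "region": "US", "promo": "none", "converted": True},
    {"channel": "organic", "region": "IN", "promo": "free_trial", "converted": False},
]

conversion_rate = RateMetric(
    name="conversion_rate",
    entity="user",
    flag="converted",
    dimensions={"channel", "region"},
)
by_region = conversion_rate.compute(users, by=("region",))

# The product analyst registers a new attribute; the metric definition is untouched.
conversion_rate.dimensions.add("promo")
by_promo = conversion_rate.compute(users, by=("promo",))
```

The growth team can now slice by `promo` without touching the metric itself, which is the flexibility a hand-written SQL blob does not give you.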

And this can get more powerful quickly. Let’s start with the report built on the semantics showing conversion rate by channel and region. I look at this report, and want to understand why the conversion rate went down month over month. I could play around with this dashboard, work through a bunch of select and drop-down operations, remember the numbers (maybe write them down on paper!), and do some calculations to tease out the answer. Or, I can export this to a spreadsheet and do this more precisely.

Or, I can just email an analyst with “can you look into why this metric went down?” which, trust me, they love receiving. Now imagine if this operation or transformation could be automated: a piece of software computes and displays how the metric changed across the segments in these two time windows, and enables you to quickly diagnose whether the change is driven by the behavior of specific regions or channels, or just by a change in the proportion of users across regions (assuming newer regions have lower conversion rates but are growing in size), or a combination of both.
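One way such a diagnosis could work is a standard rate-versus-mix decomposition. The sketch below is my own illustration, not a description of any specific tool: the function name and the numbers are made up, and it assumes every segment appears in both periods with at least one user. It writes the overall rate as a weighted sum of segment rates and splits the change into a part explained by segment rates moving and a part explained by the user mix shifting. Using midpoint weights makes the two parts add up exactly to the total change.

```python
def decompose_rate_change(prev, curr):
    """Split the change in overall conversion rate into (rate_effect, mix_effect).

    prev and curr map segment -> (users, conversions). Assumes both periods
    cover the same segments and every segment has at least one user.
    """
    if prev.keys() != curr.keys():
        raise ValueError("both periods must cover the same segments")
    prev_total = sum(users for users, _ in prev.values())
    curr_total = sum(users for users, _ in curr.values())

    rate_effect = 0.0
    mix_effect = 0.0
    for segment in prev:
        users0, conv0 = prev[segment]
        users1, conv1 = curr[segment]
        share0, share1 = users0 / prev_total, users1 / curr_total
        rate0, rate1 = conv0 / users0, conv1 / users1
        # Midpoint weights: share*rate changes by avg_share*d_rate + avg_rate*d_share.
        rate_effect += (share0 + share1) / 2 * (rate1 - rate0)
        mix_effect += (rate0 + rate1) / 2 * (share1 - share0)
    return rate_effect, mix_effect


# Made-up numbers: segment rates are unchanged, but the lower-converting
# new regions grow from 20% to 50% of users.
last_month = {"established": (800, 80), "new": (200, 10)}
this_month = {"established": (800, 80), "new": (800, 40)}

rate_effect, mix_effect = decompose_rate_change(last_month, this_month)
```

In this example the overall rate falls from 9% to 7.5%, and the whole drop lands in `mix_effect`: no region is behaving worse, the audience simply shifted toward regions that convert less.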

When an application can reliably answer questions like this with just a few clicks, you have unlocked value not just for the business operator in the growth team, but also for the data analyst who fields dozens of these requests every day. Multiply this by the number of metrics and attributes, the number of business users and analysts involved, and suddenly you are looking at a paradigm shift in analytics and ROI on your data.

Now, this can get even more impactful. What if the product team is reporting that their experiments in the last quarter improved conversion rates in new markets but the overall rate is in fact coming down? A tool that can help control for change in the mix of markets can be enormously valuable in assessing the impact of such experimental initiatives.

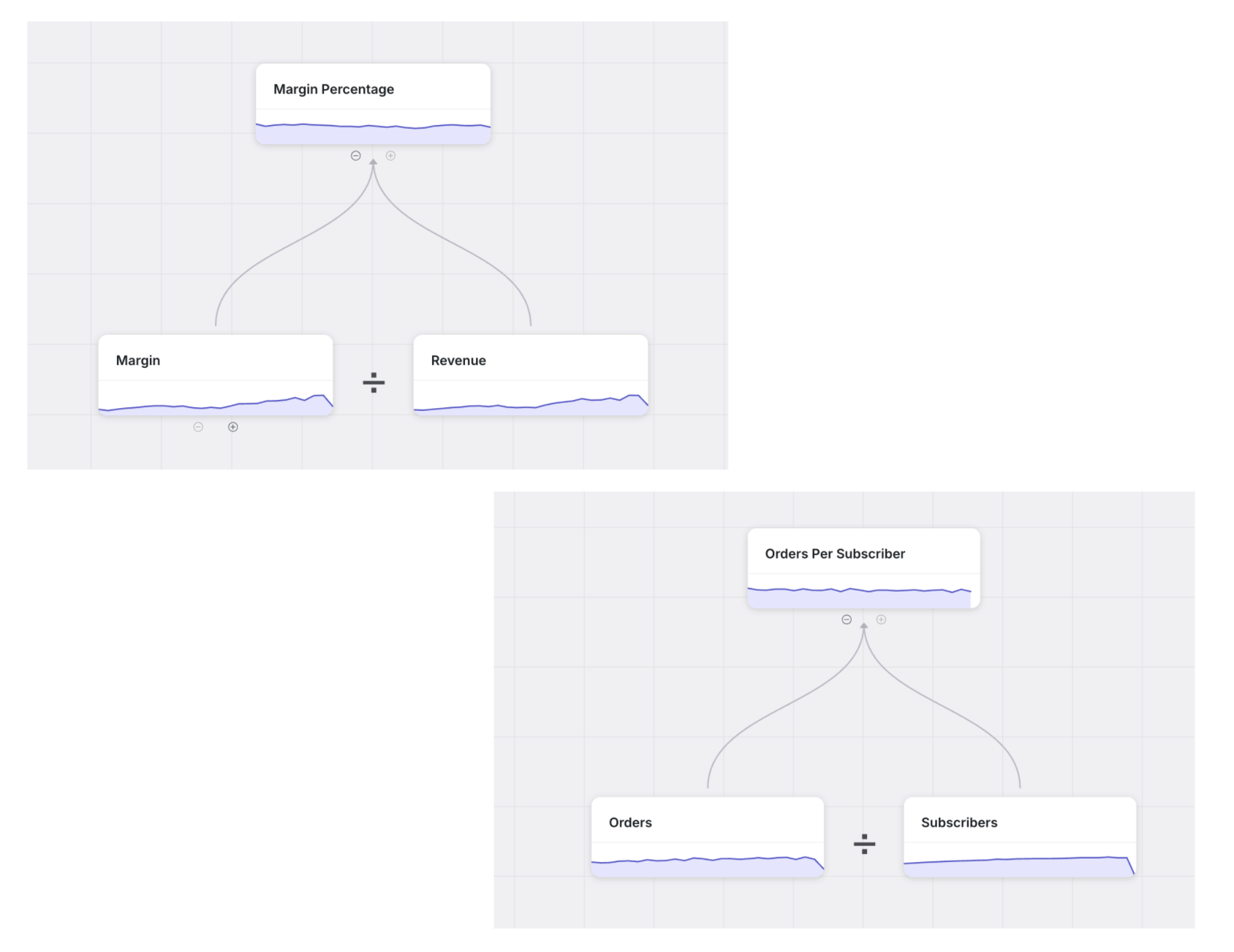
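Controlling for the change in market mix can be sketched with direct standardization: recompute this quarter's rate using last quarter's user shares as weights. The function names and figures below are hypothetical, and the sketch assumes every segment in the current period also exists in the reference period.

```python
def overall_rate(period):
    """Plain conversion rate across all segments; period maps segment -> (users, conversions)."""
    users = sum(u for u, _ in period.values())
    conversions = sum(c for _, c in period.values())
    return conversions / users


def mix_adjusted_rate(period, reference):
    """Rate for `period` if its users were distributed like `reference`."""
    ref_total = sum(users for users, _ in reference.values())
    return sum(
        (reference[segment][0] / ref_total) * (conversions / users)
        for segment, (users, conversions) in period.items()
    )


# Made-up numbers: new markets improve from 5% to 6% conversion,
# while growing from 20% to 50% of all users.
last_quarter = {"established": (800, 80), "new": (200, 10)}
this_quarter = {"established": (800, 80), "new": (800, 48)}

before = overall_rate(last_quarter)                        # 9%
after = overall_rate(this_quarter)                         # 8%
adjusted = mix_adjusted_rate(this_quarter, last_quarter)   # 9.2%
```

With these numbers the headline rate drops from 9% to 8%, yet the mix-adjusted rate rises to 9.2%, so the product team's claim and the falling overall number are both true.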

And this can get even more interesting: if I see that newer regions are converting poorly but are attracting a lot of users, I may want to understand how this impacts new customer growth, and consequently, retention, and then overall revenue. Imagine a software application that can intelligently read a semantic model that threads these metrics through the user entity we already defined, and enable this type of connected cascading calculation across different metrics.

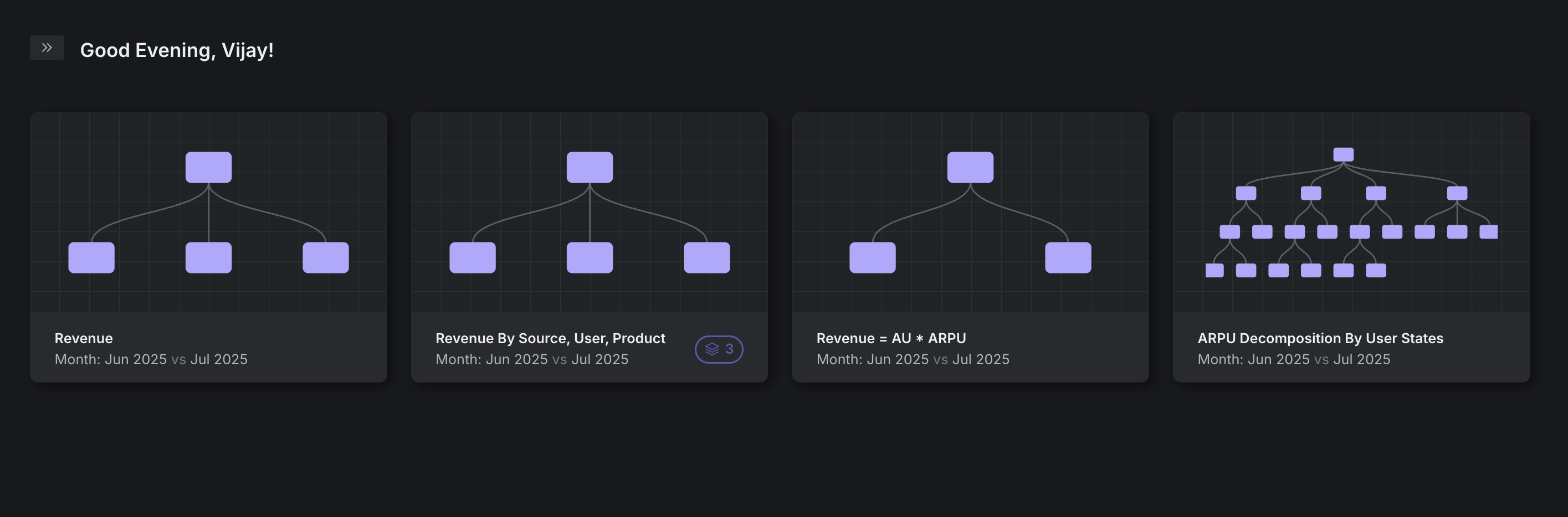
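As a toy illustration of that cascade, the sketch below chains three downstream metrics off the same user-level inputs. The metric names, formulas, and numbers are all assumptions I made up for the example. A real semantic model would carry far richer definitions, but the shape is the same: each metric is declared in terms of upstream ones, so a change in conversion rate flows through to revenue.

```python
# Each downstream metric is a formula over upstream values (hypothetical definitions).
# Entries are listed upstream-first; dicts preserve insertion order.
METRICS = {
    "new_customers": lambda m: m["users"] * m["conversion_rate"],
    "retained_customers": lambda m: m["new_customers"] * m["retention_rate"],
    "revenue": lambda m: m["retained_customers"] * m["revenue_per_customer"],
}


def cascade(inputs):
    """Evaluate every metric in order, feeding each result to the next."""
    values = dict(inputs)
    for name, formula in METRICS.items():
        values[name] = formula(values)
    return values


# Made-up inputs for a newer region that converts poorly but attracts many users.
new_region = {
    "users": 800,
    "conversion_rate": 0.05,
    "retention_rate": 0.5,
    "revenue_per_customer": 100.0,
}

baseline = cascade(new_region)
# What-if: the new region converts as well as established ones.
what_if = cascade({**new_region, "conversion_rate": 0.10})
```

Here doubling the conversion rate doubles revenue because the toy formulas are purely multiplicative; the interesting cases are the ones where retention or revenue per customer also differ by region.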

This is the future that data applications can and will enable. Software does more of the heavy lifting, leveraging a business semantic layer to automate common and complex use cases, freeing up data operators to focus on higher-order work. Personally, I’m excited to build towards this future at Trace.